The Department of Homeland Security (DHS) goal is: “A secure and resilient nation with the capabilities required across the whole community to prevent, protect against, mitigate, respond to, and recover from the threats and hazards that pose the greatest risk.” The Homeland Security Infrastructure Program (HSIP), a joint effort of the National Geospatial-Intelligence Agency (NGA), the U.S. Geological Survey (USGS), and the Federal Geographic Data Committee (FGDC), established the development of Minimum Essential Data Sets (MEDS), the data needed to conduct missions in support of homeland defense and security. The MEDS stipulated by DHS are boundaries, orthoimagery, geographic names, transportation, land cover, elevation, and hydrography.

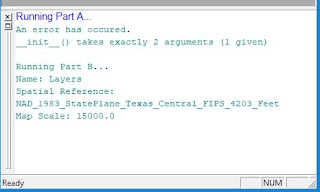

In this week's lab, we prepared a geospatial dataset for homeland security planning and operations. DHS defines "prepare" as "the necessary action to put something into a state where it is fit for use or action, or for a particular event or purpose." We were provided transportation, national hydrography, landmark, orthoimagery, elevation, and geographic names data downloaded from the National Map Viewer, and from these we created a comprehensive and interoperable geospatial database. We identified the Minimum Essential Data Sets stipulated by DHS. We manipulated and queried spatial data using geoprocessing tools, explored data frame and layer projection properties, and joined tables to categorize feature classes. We also added a color map to layer symbology, converted text and x,y data to feature classes, and saved group layers as layer files. All of these steps prepared the GIS data for analysis.
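Two of the steps above, converting x,y text data to point features and joining a table to categorize features, can be illustrated outside the GIS software. The sketch below is a plain-Python approximation, not the geoprocessing tools used in the lab. The table contents, field names (`type_code`, `category`), and helper functions (`xy_to_features`, `join_table`) are hypothetical, the coordinates are assumed to be longitude/latitude, and no projection is applied. Features are stored as GeoJSON-style dictionaries.

```python
import csv
import io

# Hypothetical x,y text table; field names and rows are illustrative only.
POINTS_CSV = """id,name,type_code,x,y
1,Station A,FS,-77.03,38.89
2,Clinic B,HC,-77.01,38.90
3,School C,ED,-77.05,38.88
"""

# Hypothetical lookup table used to categorize features through an attribute join.
CATEGORY_CSV = """type_code,category
FS,Emergency Services
HC,Public Health
"""


def xy_to_features(text, x_field="x", y_field="y"):
    """Turn each row of an x,y table into a GeoJSON-style point feature.

    Assumes x is longitude and y is latitude; no projection is applied.
    """
    features = []
    for row in csv.DictReader(io.StringIO(text)):
        x = float(row.pop(x_field))
        y = float(row.pop(y_field))
        features.append({
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [x, y]},
            "properties": row,  # remaining columns become attributes
        })
    return features


def join_table(features, table_text, key):
    """Left-join table rows onto feature attributes by a shared key field.

    Features with no matching row are kept and receive None for the
    joined fields, so no features are dropped by the join.
    """
    rows = list(csv.DictReader(io.StringIO(table_text)))
    lookup = {row[key]: row for row in rows}
    join_fields = [f for f in (rows[0].keys() if rows else []) if f != key]
    for feature in features:
        match = lookup.get(feature["properties"][key], {})
        for field in join_fields:
            feature["properties"][field] = match.get(field)
    return features


features = join_table(xy_to_features(POINTS_CSV), CATEGORY_CSV, key="type_code")
for f in features:
    print(f["properties"]["name"], f["geometry"]["coordinates"], f["properties"]["category"])
```

The join keeps every feature, including "School C", whose `type_code` has no entry in the lookup table. Those unmatched records are easy to spot and categorize afterward.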